Mapping and managing headlands, autonomously

Field Boundary Mapping System (patent pending)

Introduction

Mojow Autonomous Solutions Inc. (Mojow) is developing a farm field mapping system that allows an autonomous tractor to identify, predict, decide on, and then execute the navigation of headland passes. It does this by following field boundaries on the go, in real time, and without a human in the cab. The system includes the sensors, software, and computing power needed to process sensor data and to build and maintain field boundary maps in real time. Existing maps are stored in the cloud and made available through the EYEBOX™ mobile application, so farmers can access and use their maps anywhere, anytime.

In this article, we start by discussing current field mapping practices in broadacre farming. We then present Mojow's proposed solution for simplifying and improving these practices. Finally, we introduce the key features of the EYEBOX mapping system, followed by example videos from field tests.

Manual method of creating field boundaries

The most common method of creating field boundaries for autonomous equipment relies heavily on RTK-corrected GPS being available 100% of the time. It records the tractor's GPS coordinates while a human operator manually drives a headland pass around the entire field perimeter. Once the headland pass is complete, the data is post-processed and the field boundary map is finished. This boundary map is then used to create AB lines for the field coverage task. This mapping method is simple for a human operator to complete; however, it raises a number of major issues that make it inefficient and impractical for farming operations. They are listed below:

1) Changing field boundaries

For many reasons, field boundaries do not remain fixed throughout a farming season. Heavy rain events or persistently wet weather in spring can create sloughs or wet spots that must be avoided during spring operations, but those same areas may dry up and require boundary adjustments for spraying or heavy harrowing in the fall.

Another common example is a tree that falls into a cultivated portion of a field. The tree must be avoided to prevent equipment damage. Maps prepared during an earlier field operation may no longer be dependable for an autonomous tractor that lacks real-time obstacle sensing and avoidance.

Field boundaries can also shift very slightly for other reasons. Imagine a human operator who completes the seeding or planting operation every season. Over multiple crop years, this manual driving can slowly shift field boundaries inwards, making the field slightly smaller. The generally accepted fix is to work or till the ground along the field boundary to push it back outwards for the next field operation. These continuous, slight changes to field boundaries have the potential to create havoc for autonomous farming equipment.

2) Time consuming

Manually mapping field boundaries takes a significant amount of time, often that of an experienced operator or manager. Depending on the field's shape and irregularities, driving headlands can account for more than 10% of a human operator's time in the tractor cab.

Manual mapping and remapping can also become difficult and costly to maintain. For example, if only a small corner of a field boundary has changed, you will most likely have to map the whole field again, because few tools allow a farmer to edit existing boundaries.

The computational power available on today's autonomous tractors makes it possible not only to address these challenges but also to make mapping fields much easier for human operators.

EYEBOX field mapping system (patent pending)

Introducing EYEBOX, Mojow's solution to the farm field mapping problem: a simple-to-use system that employs computer vision technology to bring autonomous farming closer to your farm through our exclusive field mapping system.

Sensors

Our mapping solution uses readily available sensors such as cameras, GPS, an IMU, and Lidar. EYEBOX typically carries all of these sensors onboard, and they can be used in different configurations depending on the farmer's needs. Specific tasks and complex mapping may require more sensors, and EYEBOX has the flexibility and power to get the job done.

In this field mapping example, we evaluated two different configurations:

- Stereo camera and GPS

- Lidar, RGB camera and GPS

Both configurations have been field tested and proved equally reliable. Here we focus on the first configuration (stereo camera and GPS) and, where appropriate, mention where Lidar can substitute for or complement the cameras.

In this configuration, we use multiple cameras with different viewpoints. For field boundary mapping, we are typically interested in the view in front of the tractor, covering a field of view of 180 degrees or more. Below are a few images from different cameras on the tractor.

Multiple cameras are mounted on the front of the tractor with slightly overlapping fields of view, similar to human binocular vision. EYEBOX has the advantage of seeing more at once, and of processing and acting on what it sees. It sees both sides of the tractor, which is especially important during short-radius turns along headlands.

We use stereo cameras to capture images along with a depth map for each image. The depth maps let us estimate the distance from the camera to any point in the image, which we use to determine where the field boundary is relative to the tractor.
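For a rectified stereo pair, depth follows from the standard pinhole relation Z = f·B/d, where f is the focal length in pixels, B is the baseline between the two lenses, and d is the disparity between matching pixels. The sketch below shows that relation and how a boundary pixel can then be back-projected into 3-D camera coordinates. The `StereoCamera` class and all parameter values are hypothetical; the article does not describe EYEBOX's camera models or calibration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class StereoCamera:
    """Hypothetical intrinsics of a rectified stereo pair (not EYEBOX values)."""
    fx: float        # focal length, pixels (horizontal)
    fy: float        # focal length, pixels (vertical)
    cx: float        # principal point x, pixels
    cy: float        # principal point y, pixels
    baseline: float  # distance between the two lenses, meters


def depth_from_disparity(cam: StereoCamera, disparity_px: float) -> float:
    """Pinhole stereo relation: Z = fx * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (invalid match or point at infinity)")
    return cam.fx * cam.baseline / disparity_px


def pixel_to_camera(cam: StereoCamera, u: float, v: float,
                    depth_m: float) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) at a known depth.
    Camera frame: x to the right, y down, z along the optical axis."""
    x = (u - cam.cx) * depth_m / cam.fx
    y = (v - cam.cy) * depth_m / cam.fy
    return (x, y, depth_m)


if __name__ == "__main__":
    cam = StereoCamera(fx=700.0, fy=700.0, cx=640.0, cy=360.0, baseline=0.12)
    z = depth_from_disparity(cam, disparity_px=8.4)  # roughly 10 m
    print(pixel_to_camera(cam, u=780.0, v=400.0, depth_m=z))
```

Because depth is inversely proportional to disparity, a one-pixel matching error costs far more accuracy at long range than up close, which matters when deciding how far ahead camera-derived boundary positions can be trusted.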

Note that in the second configuration, Lidar provides the depth information. EYEBOX uses GPS to tell the mapping system where the tractor is, and therefore where detected objects are, in the field. GPS provides global positioning, whereas the cameras provide precise positions of objects within their field of view relative to the tractor. Together, they help us build accurate local and global maps of farm fields.
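The hand-off between the two frames can be illustrated with a 2-D rigid transform: given the tractor's GPS position (projected onto a planar easting/northing grid such as UTM) and its heading, a point measured relative to the tractor is rotated and translated into map coordinates. The function name, the frame conventions (x forward, y left; heading clockwise from north), and the flat-ground assumption are ours, for illustration only.

```python
import math
from typing import Tuple


def tractor_to_global(easting_m: float, northing_m: float, heading_deg: float,
                      x_fwd_m: float, y_left_m: float) -> Tuple[float, float]:
    """Map a tractor-relative point to planar global coordinates.

    Illustrative assumptions: the GPS antenna sits at the tractor's reference
    point, heading is measured clockwise from grid north, and the ground is flat.
    """
    h = math.radians(heading_deg)
    # Tractor axes expressed in (east, north):
    #   forward = (sin h, cos h), left = (-cos h, sin h)
    east = easting_m + x_fwd_m * math.sin(h) - y_left_m * math.cos(h)
    north = northing_m + x_fwd_m * math.cos(h) + y_left_m * math.sin(h)
    return east, north


if __name__ == "__main__":
    # Tractor facing due east; a boundary point 10 m ahead and 2 m to the left.
    print(tractor_to_global(500000.0, 5600000.0, 90.0, 10.0, 2.0))
```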

Perception

In this section, we describe how EYEBOX processes all of this data into different representations of the farm, which are used to build local and global maps.

Our perception system processes incoming data from all the sensors and produces compact representations of the objects of interest around the tractor. We use deep learning models to detect field boundaries in the color images from the tractor's cameras. The perception system processes images from multiple cameras with different fields of view and passes the results to the field mapping system.
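The article does not describe the model architecture. If the model is treated as a semantic segmentation network that labels each pixel as field or non-field (an assumption on our part), boundary pixels can be pulled out of its output mask with a simple neighborhood test, sketched below with a hypothetical `boundary_pixels` helper.

```python
from typing import List, Tuple

# Hypothetical model output: 1 = field, 0 = non-field, one value per pixel.
Mask = List[List[int]]


def boundary_pixels(mask: Mask) -> List[Tuple[int, int]]:
    """Return (row, col) of field pixels that have at least one non-field
    4-neighbor. Image edges alone do not make a pixel a boundary pixel."""
    rows, cols = len(mask), len(mask[0])
    found = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and mask[nr][nc] == 0:
                    found.append((r, c))
                    break  # one non-field neighbor is enough
    return found


if __name__ == "__main__":
    # Top row: non-field (e.g. a tree line); lower rows: cultivated field.
    mask = [[0, 0, 0, 0],
            [0, 1, 1, 1],
            [1, 1, 1, 1]]
    print(boundary_pixels(mask))
```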

High-quality datasets covering many different crop types have been used to train and test many different deep learning models. This training and testing has been crucial in finding the model that best satisfies our strict field mapping requirements. Our datasets contain many examples of the different types of field boundaries seen in broadacre farming. Mojow's current field boundary detection model is based on more than 130,000 annotated images (and counting). You can find a few examples of annotated field boundaries from our datasets below.

We are seeing the system's performance and reliability improve with each round of retraining on new data that we continue to annotate. We are adding support for detecting every type of field boundary found in broadacre farming so that our mapping system encounters no surprises.

The following video shows the output of the perception system for different cameras and different viewpoints.

left camera perception

front camera perception

Our perception system can already distinguish field from non-field areas in images. If there is a truck, quad, or any other object in the field, EYEBOX will see it and estimate its boundaries so that it can be mapped spatially.

Once boundaries are detected in the image frames, the perception system uses the depth map from the stereo cameras to estimate the location of each point on a detected boundary relative to the tractor. This is our local mapping system, which allows the tractor to navigate locally without relying on GPS. In summary, the perception system does the following:

- Processes all the incoming images from the cameras

- Detects field and non-field boundaries in each image

- Estimates the location of each point on detected boundaries relative to the tractor
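Because several cameras look in different directions, each camera's measurements have to be expressed in one common tractor frame before they can be combined. A minimal sketch follows, assuming each camera is mounted level (no pitch or roll) at a known position and yaw on the tractor; the `CameraMount` type and the axis conventions are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class CameraMount:
    """Hypothetical extrinsics of one camera on the tractor.
    Assumes a level camera (no pitch or roll) to keep the sketch short."""
    x: float        # meters forward of the tractor reference point
    y: float        # meters left of the tractor reference point
    z: float        # meters above the tractor reference point
    yaw_deg: float  # 0 = looking straight ahead, +90 = looking left


def camera_to_tractor(mount: CameraMount, xc: float, yc: float,
                      zc: float) -> Tuple[float, float, float]:
    """Convert an optical-frame point (x right, y down, z forward) into the
    tractor frame (x forward, y left, z up)."""
    fwd, left, up = zc, -xc, -yc  # re-axis for a level camera
    yaw = math.radians(mount.yaw_deg)
    xt = mount.x + math.cos(yaw) * fwd - math.sin(yaw) * left
    yt = mount.y + math.sin(yaw) * fwd + math.cos(yaw) * left
    return xt, yt, mount.z + up


if __name__ == "__main__":
    left_cam = CameraMount(x=2.0, y=0.5, z=2.5, yaw_deg=90.0)
    # A boundary point 10 m out along the left camera's axis, 1 m below it.
    print(camera_to_tractor(left_cam, 0.0, 1.0, 10.0))
```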

Local mapping

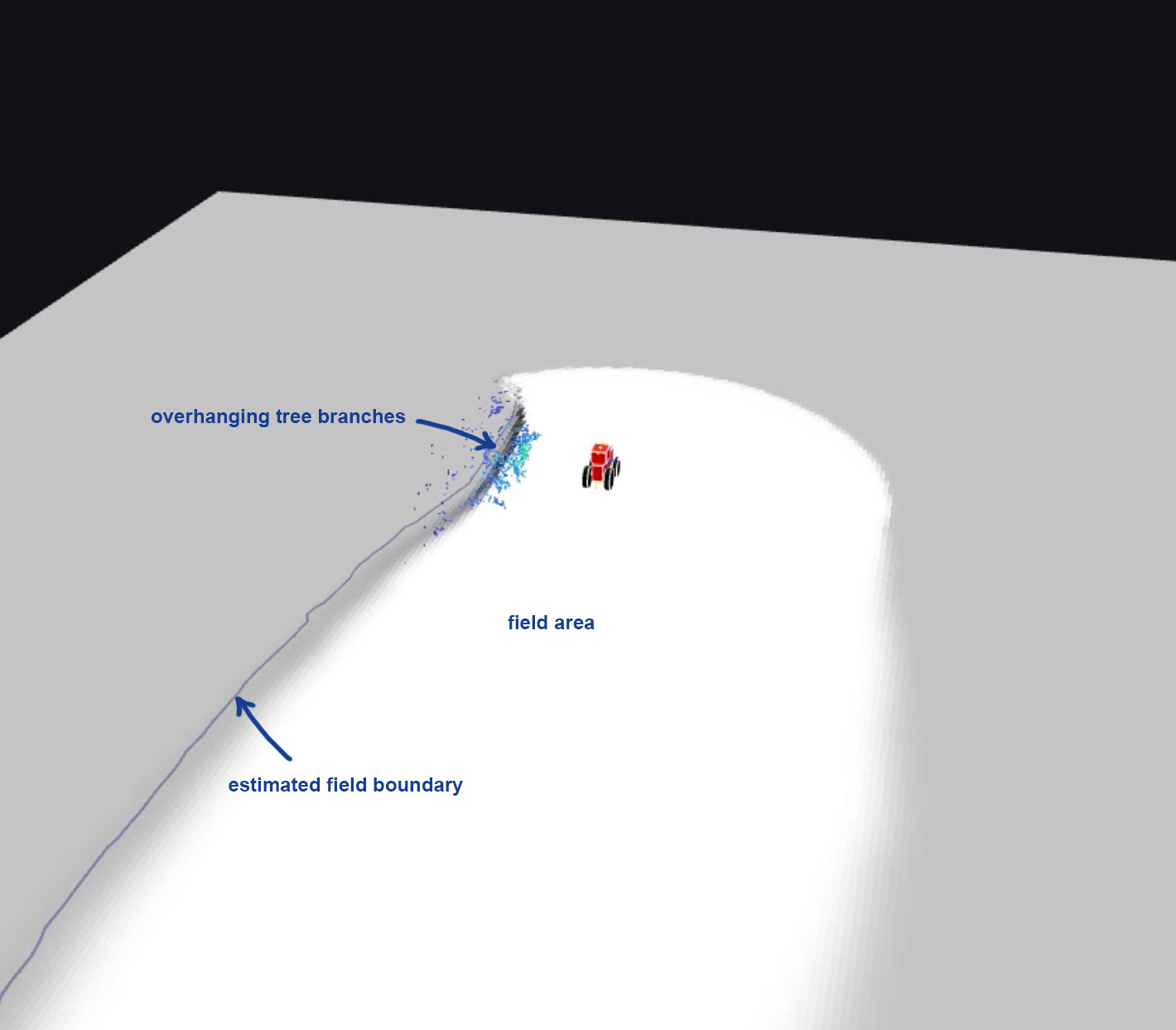

Before moving the tractor further, we need to understand the operating environment around it. For example, we want to know whether there are any obstacles in the intended travel path of the tractor or the attached implement. Many use cases call for a system that performs local maneuvers without relying entirely on GPS. EYEBOX creates local maps: compact, 3-dimensional digital representations of the tractor's surroundings, built from the field boundaries detected by the perception system.
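The article describes local maps as compact 3-D representations. A common, simpler stand-in is a 2-D grid centered on the tractor in which cells containing detected non-field points are marked; the `LocalGrid` class below is a hypothetical sketch of that idea, not EYEBOX's map format.

```python
import math
from typing import Iterable, Set, Tuple


class LocalGrid:
    """Hypothetical 2-D, tractor-centered simplification of a local map.
    Coordinates: x forward, y left, in meters relative to the tractor."""

    def __init__(self, half_extent_m: float = 50.0, resolution_m: float = 0.5):
        self.half_extent_m = half_extent_m
        self.resolution_m = resolution_m
        self.non_field: Set[Tuple[int, int]] = set()  # indices of occupied cells

    def _in_extent(self, x: float, y: float) -> bool:
        return abs(x) <= self.half_extent_m and abs(y) <= self.half_extent_m

    def cell(self, x: float, y: float) -> Tuple[int, int]:
        return (math.floor(x / self.resolution_m), math.floor(y / self.resolution_m))

    def add_points(self, points: Iterable[Tuple[float, float]]) -> None:
        """Mark cells that contain detected non-field points (boundary, obstacles)."""
        for x, y in points:
            if self._in_extent(x, y):
                self.non_field.add(self.cell(x, y))

    def is_free(self, x: float, y: float) -> bool:
        """Conservative: anything outside the map extent is not known to be free."""
        return self._in_extent(x, y) and self.cell(x, y) not in self.non_field


if __name__ == "__main__":
    grid = LocalGrid()
    grid.add_points([(10.2, -3.1), (80.0, 0.0)])
    print(grid.is_free(10.3, -3.2), grid.is_free(0.0, 0.0), len(grid.non_field))
```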

The following figure shows a sample local map, in which you can see the field area, the non-field area, and some overhanging tree branches on the field boundary. These maps are used for any local navigation task, such as driving a headland pass or driving around an obstacle (e.g., a truck or a tree).
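A typical local-map query during headland or obstacle maneuvers is whether anything lies in the strip the tractor and implement are about to sweep. The sketch below covers only the straight-ahead case, with points in a tractor-relative frame (x forward, y left) and the implement assumed centered behind the tractor; the function name and the safety margin are hypothetical.

```python
from typing import Iterable, Optional, Tuple


def nearest_obstacle_ahead(points: Iterable[Tuple[float, float]],
                           implement_width_m: float,
                           lookahead_m: float,
                           margin_m: float = 0.5) -> Optional[float]:
    """Distance to the closest non-field point inside the straight corridor
    swept by a centered implement over the next `lookahead_m` meters, or None."""
    half_width = implement_width_m / 2.0 + margin_m
    hits = [x for x, y in points
            if 0.0 <= x <= lookahead_m and abs(y) <= half_width]
    return min(hits) if hits else None


if __name__ == "__main__":
    detections = [(12.0, 1.0), (5.0, 9.0), (20.0, -2.0)]
    print(nearest_obstacle_ahead(detections, implement_width_m=6.0, lookahead_m=30.0))
```

An offset or wider-than-tractor implement would shift or widen the corridor; curved paths need the same test applied along the planned arc.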

The great thing about local maps is that all location measurements are relative to the tractor, so navigating within a local map doesn't require GPS. This feature makes EYEBOX unique and enables many capabilities that weren't possible on tractors before. The local maps are highly accurate and allow us to perform typical tasks as well as, and sometimes even better than, human operators.
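Keeping a tractor-relative map valid while driving requires re-expressing the stored points each time the tractor moves. The article does not say how EYEBOX estimates its own motion; assuming an onboard estimate of forward/lateral travel and yaw change between updates (for example from wheel odometry and the IMU), the update is an inverse rigid transform, sketched here with a hypothetical `shift_points` function.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x forward, y left), meters, tractor frame


def shift_points(points: List[Point], dx_m: float, dy_m: float,
                 dyaw_deg: float) -> List[Point]:
    """Re-express points in the tractor frame after the tractor has moved
    by (dx_m, dy_m) in its old frame and turned left by dyaw_deg."""
    c = math.cos(math.radians(dyaw_deg))
    s = math.sin(math.radians(dyaw_deg))
    moved = []
    for x, y in points:
        tx, ty = x - dx_m, y - dy_m        # undo the translation
        moved.append((c * tx + s * ty,     # undo the rotation
                      -s * tx + c * ty))
    return moved


if __name__ == "__main__":
    tree = [(10.0, 0.0)]
    print(shift_points(tree, 2.0, 0.0, 0.0))   # drove 2 m straight ahead
    print(shift_points(tree, 0.0, 0.0, 90.0))  # turned 90 degrees left in place
```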

Some of the many use cases of local maps on EYEBOX are:

- Unplanned obstacle avoidance

- Headland navigation

- Navigating within work yards, roadways, and field entrances

- Anywhere the GPS signal may be restricted (e.g., operating next to large trees or inside buildings)

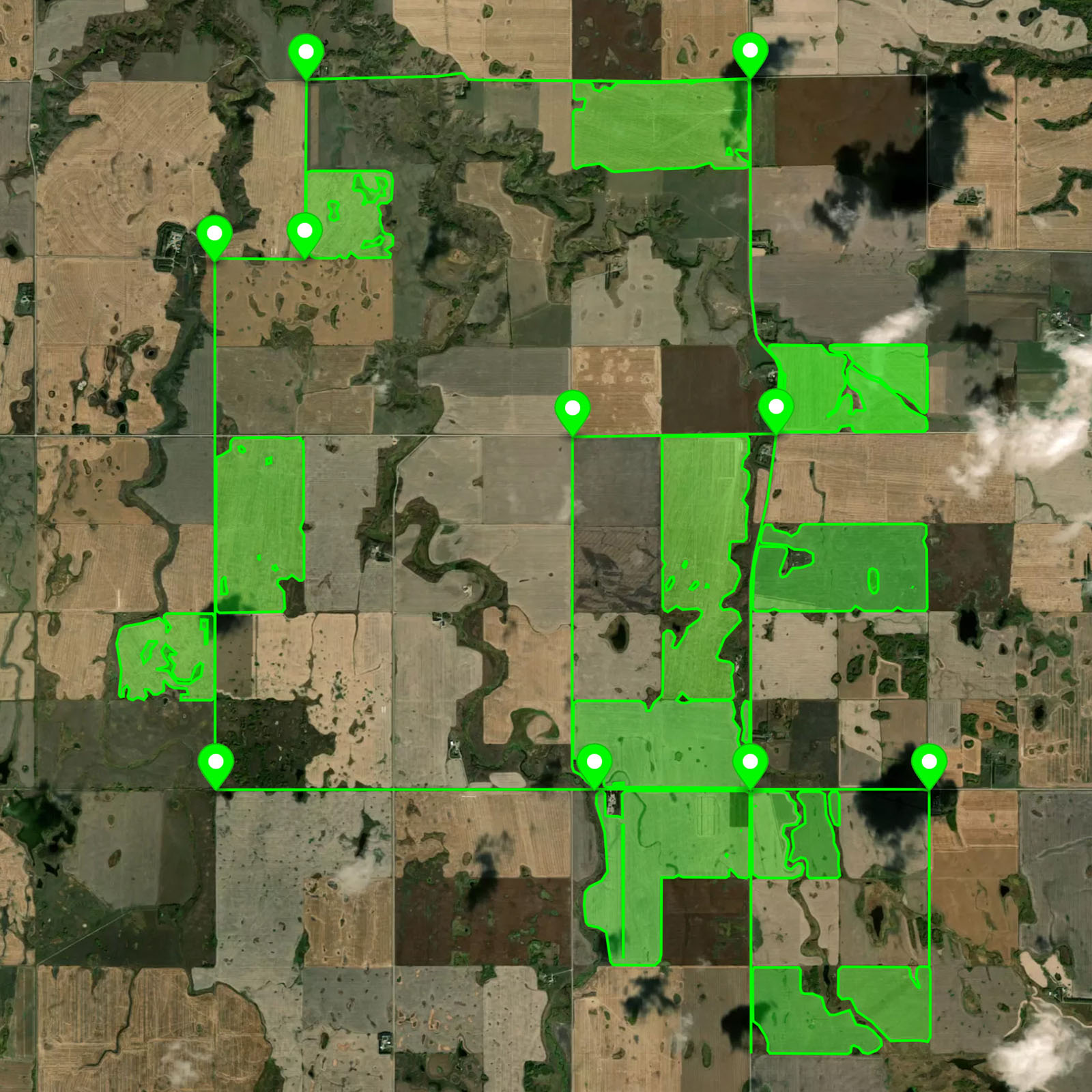

Global maps

As much as we love local maps, global maps are equally important in autonomous farming operations. Global maps provide a bird's-eye view of the farming operation and include all fields, road networks, field entrances, farm yards, and anything else of interest to the farmer that EYEBOX can see. The following figure on the left shows a simple example of a global map representing fields, the road network connecting them, and road intersections. The figure on the right shows EYEBOX™ mapping a field while operating the field headland.

Global farm maps provide EYEBOX with the information needed to pre-plan tasks such as field coverage today and, in the future, more complex tasks such as coverage that spans more than one field or field segment.
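One common way to represent the road-network part of a global map is a weighted graph whose nodes are farm yards, intersections, and field entrances and whose edges are road segments. Pre-planning a transfer between fields then reduces to a shortest-path query, shown below with Dijkstra's algorithm. The graph layout, node names, and distances are invented for illustration; the article does not specify EYEBOX's global map format or routing method.

```python
import heapq
import math
from typing import Dict, List, Tuple

Graph = Dict[str, List[Tuple[str, float]]]  # node -> [(neighbor, length_m)]


def add_road(graph: Graph, a: str, b: str, length_m: float) -> None:
    """Add a two-way road segment between nodes a and b."""
    graph.setdefault(a, []).append((b, length_m))
    graph.setdefault(b, []).append((a, length_m))


def shortest_route(graph: Graph, start: str, goal: str) -> Tuple[float, List[str]]:
    """Dijkstra's algorithm; returns (total length, node sequence)."""
    dist = {start: 0.0}
    prev: Dict[str, str] = {}
    queue = [(0.0, start)]
    done = set()
    while queue:
        d, node = heapq.heappop(queue)
        if node in done:
            continue  # stale queue entry
        done.add(node)
        if node == goal:
            break
        for nxt, length in graph.get(node, []):
            nd = d + length
            if nd < dist.get(nxt, math.inf):
                dist[nxt] = nd
                prev[nxt] = node
                heapq.heappush(queue, (nd, nxt))
    if goal not in dist:
        return math.inf, []  # goal unreachable
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return dist[goal], path[::-1]


if __name__ == "__main__":
    roads: Graph = {}
    add_road(roads, "yard", "junction_A", 400.0)
    add_road(roads, "junction_A", "field_1_entrance", 300.0)
    add_road(roads, "junction_A", "junction_B", 800.0)
    add_road(roads, "junction_B", "field_2_entrance", 200.0)
    add_road(roads, "yard", "junction_B", 1500.0)
    print(shortest_route(roads, "yard", "field_2_entrance"))
```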

Narrow entrances are static and precisely defined, and they require specialized navigation and control algorithms with intermediate, task-specific control constraints that allow the autonomous tractor to pass through successfully. Depending on a field's characteristics, however, entrances may not be bounded by fences or ditches at all; where a roadway runs along one side of a field, the entrance may span more than half a mile. Such wide, flat access gives the autonomous tractor many options for entrance and exit locations. These entrances are not fixed and can change over time. Depending on the location of the next task, EYEBOX can save time by planning and executing the most efficient entrance or exit.
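For a wide, open field edge, choosing an entrance can be framed as picking the candidate point that minimizes total travel from the tractor to the entrance and on to the start of the next task. The sketch below samples candidates along a straight edge and uses straight-line distances; both the sampling and the cost function are simplifying assumptions, not EYEBOX's planner. A narrow, fixed entrance is simply a one-element candidate list.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # planar coordinates, meters


def sample_edge(a: Point, b: Point, spacing_m: float) -> List[Point]:
    """Evenly spaced candidate entrance points along the open edge a-b."""
    n = max(1, int(math.dist(a, b) // spacing_m))
    return [(a[0] + (b[0] - a[0]) * i / n, a[1] + (b[1] - a[1]) * i / n)
            for i in range(n + 1)]


def best_entrance(candidates: List[Point], tractor: Point, task_start: Point) -> Point:
    """Candidate with the shortest tractor -> entrance -> task-start distance."""
    return min(candidates,
               key=lambda p: math.dist(tractor, p) + math.dist(p, task_start))


if __name__ == "__main__":
    # A road runs along y = 0; the field lies at y > 0.
    edge = sample_edge((0.0, 0.0), (800.0, 0.0), spacing_m=100.0)
    print(best_entrance(edge, tractor=(100.0, -50.0), task_start=(600.0, 200.0)))
```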

The EYEBOX mapping system handles any necessary changes to the global maps over time and keeps a record of them. Every time the tractor drives, it detects changes to existing boundaries and updates them if necessary. This solves the ongoing maintenance problems of today's farm field mapping practices.
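One simple way to picture this update-and-record loop: store the boundary as a list of vertices, compare each vertex with the boundary points observed on the latest pass, move vertices that are off by more than a tolerance, and log every move. The nearest-point rule, the thresholds, and the `FieldBoundary` class below are hypothetical simplifications; real sensor noise and partial visibility would call for more careful matching.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple

Point = Tuple[float, float]  # planar global coordinates, meters


@dataclass
class BoundaryChange:
    vertex_index: int
    old: Point
    new: Point


@dataclass
class FieldBoundary:
    """Hypothetical boundary store: a vertex list plus a change log."""
    vertices: List[Point]
    history: List[BoundaryChange] = field(default_factory=list)

    def update(self, observed: List[Point], search_radius_m: float = 5.0,
               tolerance_m: float = 0.5) -> int:
        """Snap vertices to the nearest observed boundary point when they
        differ by more than `tolerance_m`; return the number of changes."""
        changes = 0
        for i, v in enumerate(self.vertices):
            nearby = [p for p in observed if math.dist(v, p) <= search_radius_m]
            if not nearby:
                continue  # this stretch of boundary was not seen on this pass
            nearest = min(nearby, key=lambda p: math.dist(v, p))
            if math.dist(v, nearest) > tolerance_m:
                self.history.append(BoundaryChange(i, v, nearest))
                self.vertices[i] = nearest
                changes += 1
        return changes


if __name__ == "__main__":
    boundary = FieldBoundary([(0.0, 0.0), (100.0, 0.0), (100.0, 100.0)])
    seen_today = [(0.2, 0.1), (100.0, 2.0)]  # second corner has moved in by 2 m
    print(boundary.update(seen_today), boundary.history)
```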

Navigation

The autonomous tractor now has everything it needs for navigation: using information from the global and local maps, the system can determine the desired path of travel from the vehicle's current location to a designated target.

EYEBOX navigation is responsible for manipulating the tractor's lower-level controls to achieve the desired results, including transmission gear, engine rpm, steering, braking, and hydraulic systems. The navigation system can be broken down into two main components:

- Planning

- Control

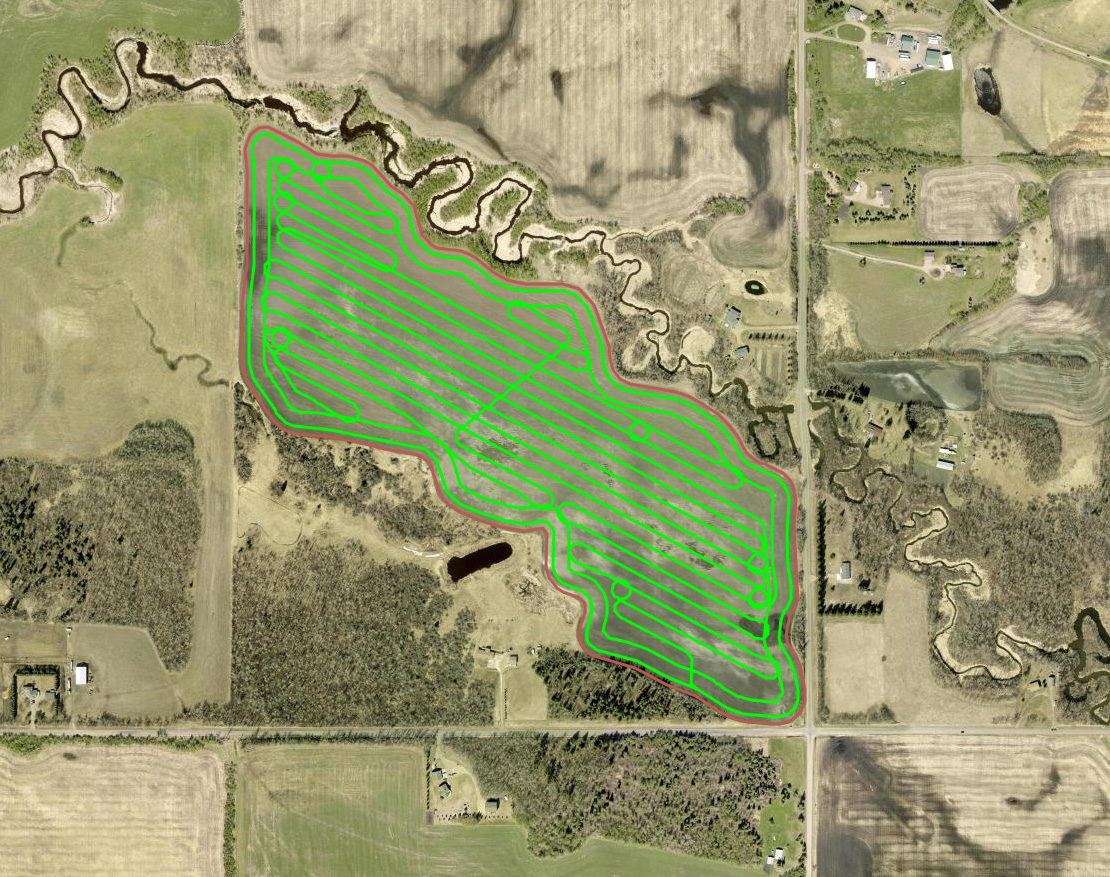

Planning

Planning produces a specific sequence of actions or states that the tractor needs to follow to reach a goal defined by the farmer. The farmer sets these high-level goals in the EYEBOX mobile application.

The actual planning task is handled automatically by EYEBOX. For example, the farmer commands EYEBOX to complete a coverage mission for a specific field. This command initiates a planning task in the navigation system, which uses the latest real-time local and global maps to generate a series of waypoints for the tractor to follow, as shown in the following figure.
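As an illustration of turning a coverage command into waypoints, the sketch below generates back-and-forth (boustrophedon) passes for a rectangular field expressed in its own coordinate frame, with passes parallel to the AB heading and stopping short of the headland. The rectangular shape, the function name, and its parameters are assumptions; real fields are irregular, and the article does not describe EYEBOX's coverage planner.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # field frame: x across the passes, y along the AB heading


def coverage_waypoints(field_width_m: float, field_length_m: float,
                       implement_width_m: float, headland_m: float) -> List[Point]:
    """Start/end waypoints of back-and-forth passes in a rectangular field.
    Passes stop `headland_m` short of each end to leave room for turning."""
    waypoints: List[Point] = []
    x = implement_width_m / 2.0           # center line of the first pass
    pass_index = 0
    while x <= field_width_m - implement_width_m / 2.0 + 1e-9:
        start, end = headland_m, field_length_m - headland_m
        if pass_index % 2 == 1:           # alternate direction on every pass
            start, end = end, start
        waypoints.extend([(x, start), (x, end)])
        x += implement_width_m
        pass_index += 1
    return waypoints


if __name__ == "__main__":
    for wp in coverage_waypoints(36.0, 400.0, implement_width_m=12.0, headland_m=24.0):
        print(wp)
```

If the field width is not a multiple of the implement width, this sketch leaves the leftover strip uncovered; a fuller planner would add a final, overlapping pass.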

EYEBOX first plans a global path based on the global maps and then uses the local map with the latest information from the field to adjust the path if necessary. The global planning happens infrequently whereas local planning runs in real-time and deals with any changes to the environment around the tractor. Both global and local planning components work together to get the job done according to the goals defined by the farmer.
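The split between an infrequent global plan and real-time local corrections can be sketched as a function that takes the pre-planned waypoints plus obstacles from the latest local map and pushes any waypoint that comes too close out to a minimum clearance. This radial push is a deliberately naive stand-in chosen for brevity, not EYEBOX's local planner; it checks waypoints only, not the segments between them.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def adjust_path(global_path: List[Point], obstacles: List[Point],
                clearance_m: float) -> List[Point]:
    """Push any waypoint closer than `clearance_m` to an obstacle radially
    outward so that it sits exactly `clearance_m` away from that obstacle."""
    adjusted: List[Point] = []
    for wx, wy in global_path:
        for ox, oy in obstacles:
            d = math.dist((wx, wy), (ox, oy))
            if d >= clearance_m:
                continue
            if d == 0.0:
                wx, wy = ox + clearance_m, oy  # no direction defined: pick +x
            else:
                scale = clearance_m / d
                wx, wy = ox + (wx - ox) * scale, oy + (wy - oy) * scale
        adjusted.append((wx, wy))
    return adjusted


if __name__ == "__main__":
    planned = [(0.0, 0.0), (0.0, 10.0), (0.0, 20.0)]  # from the global planner
    rock = [(1.0, 10.0)]                              # seen in the local map
    print(adjust_path(planned, rock, clearance_m=3.0))
```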

Control

Control is the last step of the process. Once all systems are ready to move the tractor, EYEBOX takes the latest plan from the planning component and follows it by commanding the tractor's transmission, engine, steering, and hydraulic systems.

In the EYEBOX mobile application, the user has full control over the machine, including:

- Speed setpoint

- Hydraulic remote outputs

- Adjusting AB heading

- Starting, stopping, pausing and resuming tasks

- Diagnostics

Within the farm field mapping system, the control component is responsible for accurately driving the tractor and the attached implement along the planned path in the local map. The control system continuously commands steering, transmission and engine systems with optimal inputs to smoothly operate the headland pass.
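The article does not name the path-tracking method. Pure pursuit is one widely used, simple geometric controller for this job and is shown here only as an illustrative substitute: it picks a point on the planned path roughly one look-ahead distance away and computes the steering angle of a bicycle-model vehicle that would arc through that point. Frame conventions, parameter names, and values are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # tractor frame: x forward, y left, rear axle at origin


def pure_pursuit_steering(path: List[Point], wheelbase_m: float,
                          lookahead_m: float) -> float:
    """Front-wheel steering angle in degrees (positive = left)."""
    if not path:
        raise ValueError("path is empty")
    # First point ahead of the tractor that is at least one look-ahead away;
    # near the end of the path, fall back to the final point.
    target = next((p for p in path
                   if p[0] > 0.0 and math.hypot(*p) >= lookahead_m), path[-1])
    x, y = target
    ld = math.hypot(x, y)
    if ld == 0.0:
        return 0.0  # already at the target point
    alpha = math.atan2(y, x)  # bearing of the target relative to the heading
    # Pure pursuit: curvature = 2 sin(alpha) / ld; steering = atan(wheelbase * curvature)
    return math.degrees(math.atan2(2.0 * wheelbase_m * math.sin(alpha), ld))


if __name__ == "__main__":
    curve_left = [(2.0, 0.1), (5.0, 0.6), (8.0, 1.6), (11.0, 3.0)]
    print(round(pure_pursuit_steering(curve_left, wheelbase_m=3.0, lookahead_m=7.0), 2))
```

A longer look-ahead gives smoother but lazier tracking; a shorter one tracks tightly but can oscillate, which is the main tuning trade-off for headland turns.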

Summary

All the components introduced in this article work together in real time to autonomously operate the headland pass and to create and update farm field boundary maps. We also demonstrated how our proposed solution resolves issues with current field mapping practices in broadacre farming.

The following video sums up this article by showing how all these components work together to make field boundary mapping and operating headland passes easy for the human operator.